[07/19 03:54:17] d2.evaluation.evaluator INFO: Total inference pure compute time: 0:05:37 (0.067663 s / iter per device, on 1 devices)

[07/19 03:54:17] d2.evaluation.coco_evaluation INFO: Preparing results for COCO format ...

[07/19 03:54:17] d2.evaluation.coco_evaluation INFO: Saving results to ./output/inference/coco_instances_results.json

[07/19 03:54:19] d2.evaluation.coco_evaluation INFO: Evaluating predictions with unofficial COCO API...

[07/19 03:54:19] d2.evaluation.fast_eval_api INFO: Evaluate annotation type *bbox*

[07/19 03:54:19] d2.evaluation.fast_eval_api INFO: COCOeval_opt.evaluate() finished in 0.77 seconds.

[07/19 03:54:19] d2.evaluation.fast_eval_api INFO: Accumulating evaluation results...

[07/19 03:54:19] d2.evaluation.fast_eval_api INFO: COCOeval_opt.accumulate() finished in 0.10 seconds.

[07/19 03:54:19] d2.evaluation.coco_evaluation INFO: Evaluation results for bbox:

| AP | AP50 | AP75 | APs | APm | APl |
|:------:|:------:|:------:|:------:|:------:|:------:|
| 55.244 | 83.255 | 60.158 | 36.447 | 63.029 | 72.722 |

[07/19 03:54:20] d2.evaluation.fast_eval_api INFO: Evaluate annotation type *keypoints*

[07/19 03:54:25] d2.evaluation.fast_eval_api INFO: COCOeval_opt.evaluate() finished in 5.44 seconds.

[07/19 03:54:25] d2.evaluation.fast_eval_api INFO: Accumulating evaluation results...

[07/19 03:54:25] d2.evaluation.fast_eval_api INFO: COCOeval_opt.accumulate() finished in 0.03 seconds.

[07/19 03:54:25] d2.evaluation.coco_evaluation INFO: Evaluation results for keypoints:

| AP | AP50 | AP75 | APm | APl |
|:------:|:------:|:------:|:------:|:------:|
| 63.696 | 85.916 | 69.254 | 59.249 | 72.083 |

[07/19 03:54:25] d2.engine.defaults INFO: Evaluation results for keypoints_coco_2017_val in csv format:

[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: Task: bbox

[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: AP,AP50,AP75,APs,APm,APl

[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: 55.2440,83.2547,60.1577,36.4470,63.0290,72.7224

[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: Task: keypoints

[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: AP,AP50,AP75,APm,APl

[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: 63.6964,85.9162,69.2536,59.2487,72.0832
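The `copypaste:` lines above are easy to parse when you want to compare several runs. The following is a minimal sketch, not part of detectron2: the helper name `parse_copypaste` is hypothetical, and it assumes the three-line layout (task, metric names, values) seen in this log.

```python
import re

def parse_copypaste(log_text):
    """Return {task: {metric: value}} from 'copypaste:' log lines.

    Assumes each task is logged as three consecutive copypaste lines:
    'Task: <name>', a comma-separated header, and comma-separated values.
    """
    results = {}
    task = None
    header = None
    for line in log_text.splitlines():
        match = re.search(r"copypaste:\s*(.*)$", line)
        if not match:
            continue  # not a copypaste line
        payload = match.group(1).strip()
        if payload.startswith("Task:"):
            task = payload.split(":", 1)[1].strip()
            header = None
        elif task is not None and header is None:
            header = payload.split(",")
        elif task is not None:
            values = [float(v) for v in payload.split(",")]
            results[task] = dict(zip(header, values))
            task, header = None, None  # wait for the next 'Task:' line
    return results

# Sample lines copied from the log above.
SAMPLE_LOG = """\
[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: Task: bbox
[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: AP,AP50,AP75,APs,APm,APl
[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: 55.2440,83.2547,60.1577,36.4470,63.0290,72.7224
[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: Task: keypoints
[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: AP,AP50,AP75,APm,APl
[07/19 03:54:25] d2.evaluation.testing INFO: copypaste: 63.6964,85.9162,69.2536,59.2487,72.0832
"""

metrics = parse_copypaste(SAMPLE_LOG)
print(metrics["keypoints"]["AP"])
```

Note that the keypoints task reports no `APs` column, so the parser keeps one metric dictionary per task instead of assuming a shared header.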

Training finally produced the following files in the detectron2/output directory:

config.yaml inference model_0034999.pth model_0084999.pth model_0134999.pth model_0184999.pth model_0234999.pth

events.out.tfevents.1626131087.ubuntu.23424.0 last_checkpoint model_0039999.pth model_0089999.pth model_0139999.pth model_0189999.pth model_0239999.pth

events.out.tfevents.1626131279.ubuntu.24690.0 log.txt model_0044999.pth model_0094999.pth model_0144999.pth model_0194999.pth model_0244999.pth

events.out.tfevents.1626218003.ubuntu.28190.0 metrics.json model_0049999.pth model_0099999.pth model_0149999.pth model_0199999.pth model_0249999.pth

events.out.tfevents.1626218189.ubuntu.29394.0 model_0004999.pth model_0054999.pth model_0104999.pth model_0154999.pth model_0204999.pth model_0254999.pth

events.out.tfevents.1626218366.ubuntu.30715.0 model_0009999.pth model_0059999.pth model_0109999.pth model_0159999.pth model_0209999.pth model_0259999.pth

events.out.tfevents.1626218400.ubuntu.30986.0 model_0014999.pth model_0064999.pth model_0114999.pth model_0164999.pth model_0214999.pth model_0264999.pth

events.out.tfevents.1626218466.ubuntu.31493.0 model_0019999.pth model_0069999.pth model_0119999.pth model_0169999.pth model_0219999.pth model_0269999.pth

events.out.tfevents.1626218502.ubuntu.31791.0 model_0024999.pth model_0074999.pth model_0124999.pth model_0174999.pth model_0224999.pth model_final.pth

events.out.tfevents.1626262859.ubuntu.20243.0 model_0029999.pth model_0079999.pth model_0129999.pth model_0179999.pth model_0229999.pth
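With this many checkpoints it can help to sort them by iteration, for example to evaluate intermediate models. The sketch below is illustrative only: the helper names are hypothetical, the file-name pattern is inferred from the listing above, and it assumes the `last_checkpoint` file stores the name of the most recently saved checkpoint as plain text.

```python
import re
from pathlib import Path

# Periodic checkpoints in the listing above look like model_<7 digits>.pth.
CKPT_RE = re.compile(r"^model_(\d{7})\.pth$")

def list_checkpoints(names):
    """Return (iteration, file name) pairs for periodic checkpoints, sorted by iteration."""
    found = []
    for name in names:
        match = CKPT_RE.match(name)
        if match:
            found.append((int(match.group(1)), name))
    return sorted(found)

def read_last_checkpoint(output_dir):
    """Return the text stored in `last_checkpoint`, or None if the file is missing."""
    marker = Path(output_dir) / "last_checkpoint"
    if marker.is_file():
        return marker.read_text().strip()
    return None

# A few names taken from the listing above; non-checkpoint files are ignored.
sample_names = [
    "model_0009999.pth",
    "model_final.pth",
    "model_0004999.pth",
    "log.txt",
    "metrics.json",
    "model_0269999.pth",
]
for iteration, name in list_checkpoints(sample_names):
    print(iteration, name)
```

`model_final.pth` does not match the numeric pattern, so it is handled separately; in this run it is the file passed to `MODEL.WEIGHTS` for inference.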

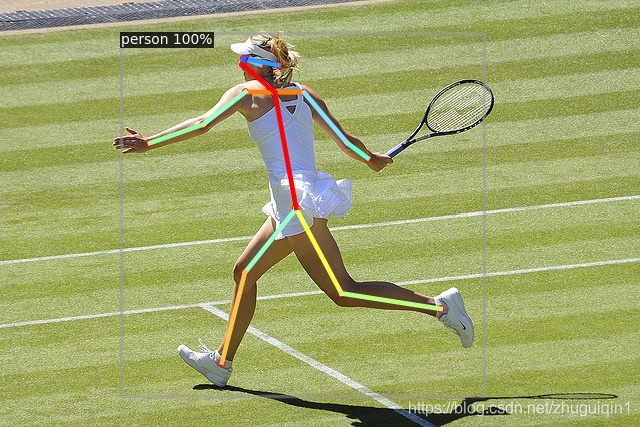

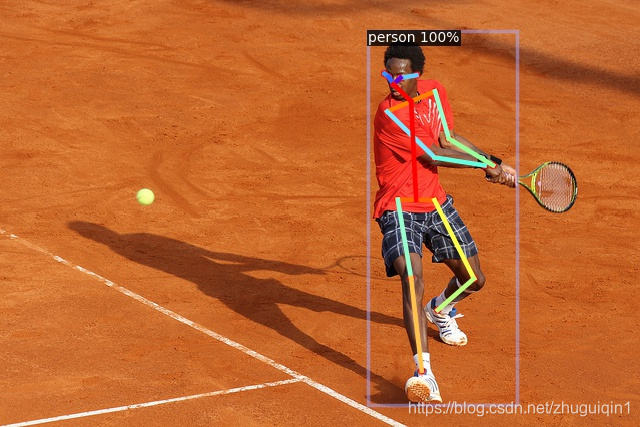

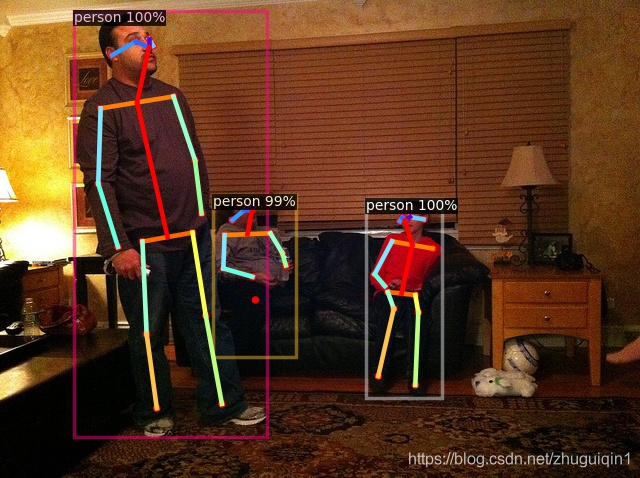

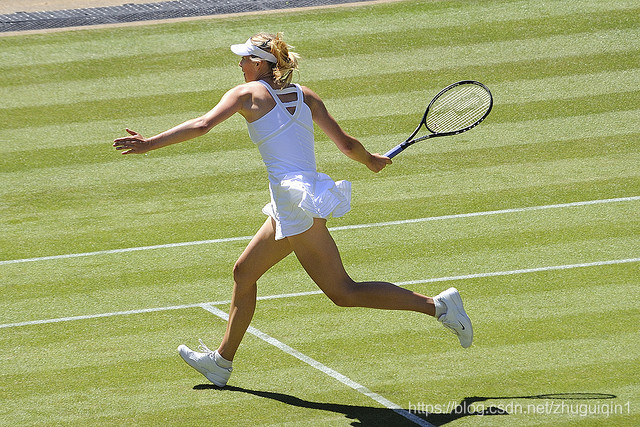

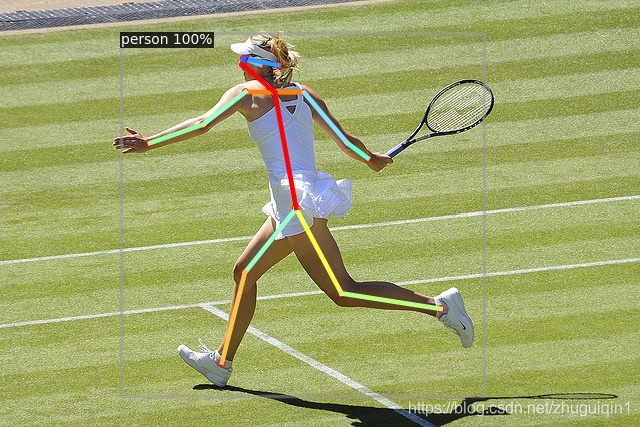

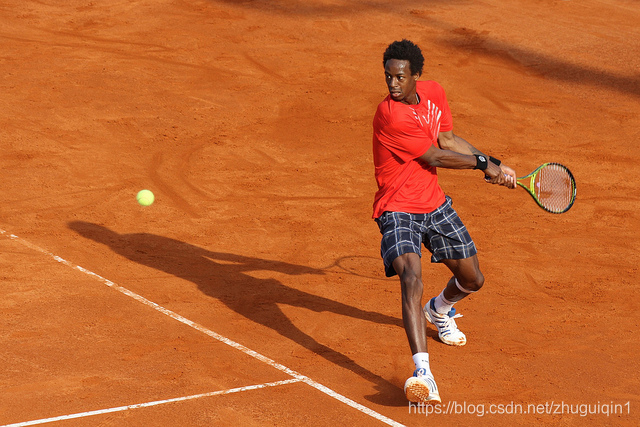

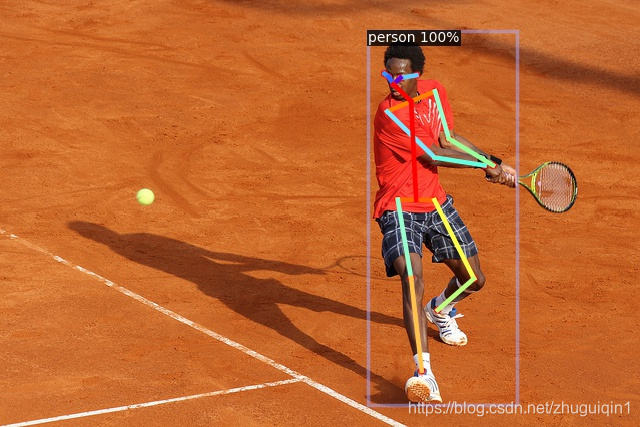

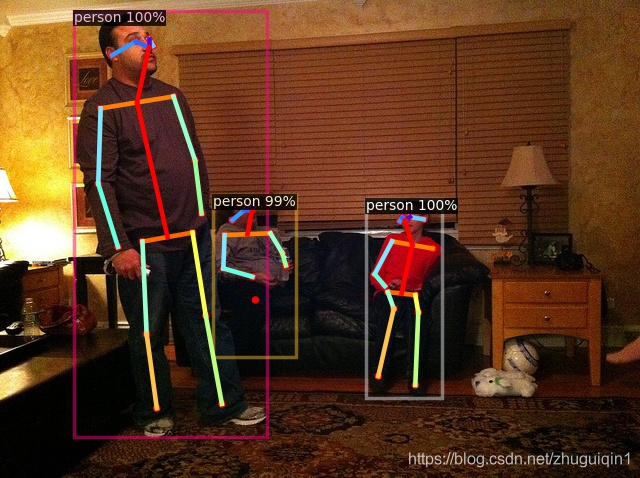

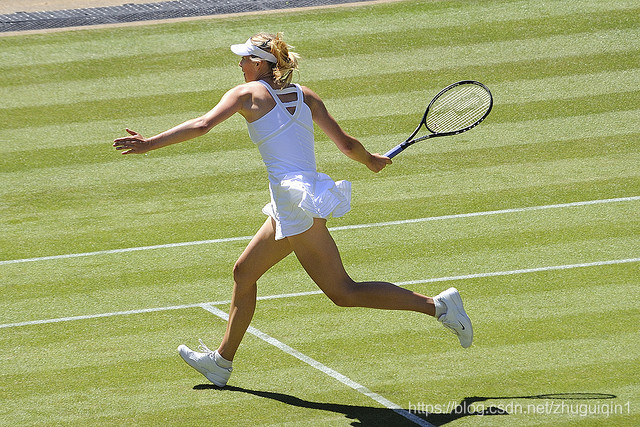

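We run inference with the last model produced by training to check how well it performs. Judging from the three images below, the results look very good.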

cd ./detectron2/demo

python demo.py --config-file ../configs/COCO-Keypoints/keypoint_rcnn_R_101_FPN_3x.yaml --output ./output --input ./keypoints_input/000000000552.jpg ./keypoints_input/000000001152.jpg ./keypoints_input/000000581918.jpg --opts MODEL.WEIGHTS ../output/model_final.pth
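When running the demo over many images, it can be convenient to assemble the command programmatically. The following is a minimal sketch that only rebuilds the command shown above; `build_demo_command` is a hypothetical helper, and the paths are the ones used in this post. It assumes the script is run from `./detectron2/demo`.

```python
import shlex

def build_demo_command(config_file, weights, inputs, output_dir):
    """Build the argument list for demo.py, mirroring the command above.

    `--opts` goes last because everything after it is treated as
    config overrides (here: MODEL.WEIGHTS).
    """
    inputs = list(inputs)
    if not inputs:
        raise ValueError("at least one input image is required")
    return [
        "python", "demo.py",
        "--config-file", config_file,
        "--output", output_dir,
        "--input", *inputs,
        "--opts", "MODEL.WEIGHTS", weights,
    ]

cmd = build_demo_command(
    config_file="../configs/COCO-Keypoints/keypoint_rcnn_R_101_FPN_3x.yaml",
    weights="../output/model_final.pth",
    inputs=[
        "./keypoints_input/000000000552.jpg",
        "./keypoints_input/000000001152.jpg",
        "./keypoints_input/000000581918.jpg",
    ],
    output_dir="./output",
)
# Print a shell-ready string; pass `cmd` to subprocess.run(cmd, check=True) to execute it.
print(shlex.join(cmd))
```

Passing the list form to `subprocess.run` avoids shell quoting problems if an image path contains spaces.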
