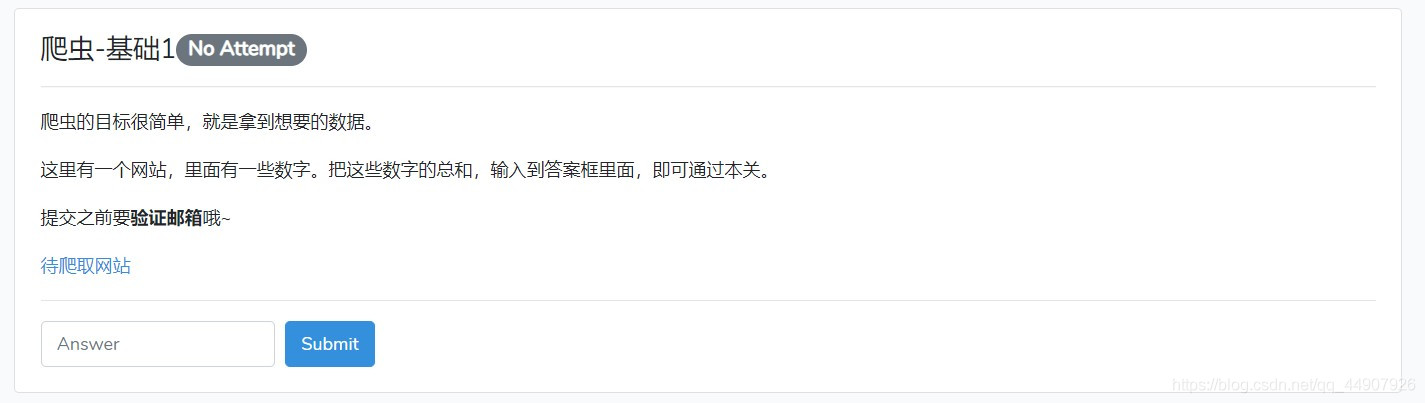

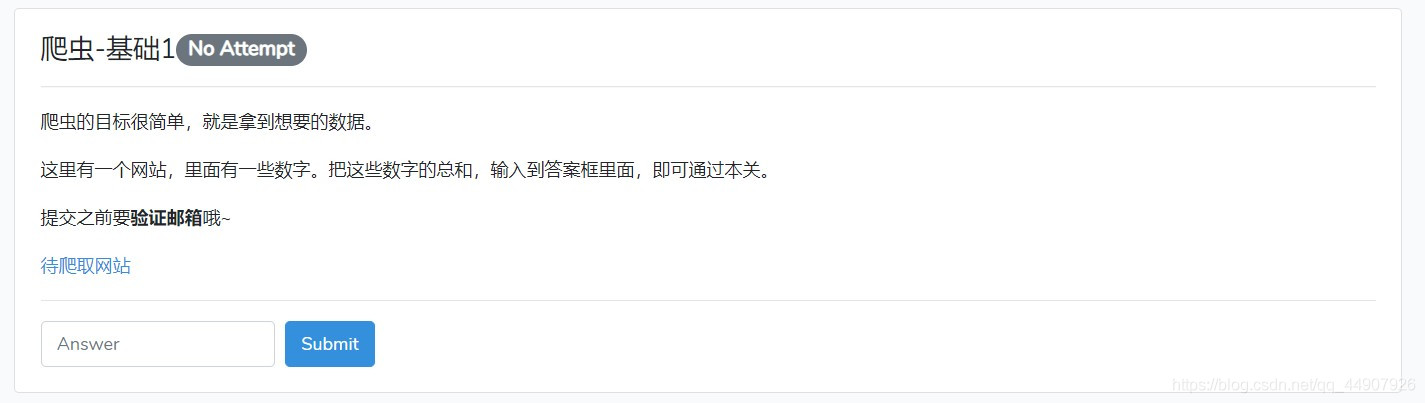

Problem 1: the task is as follows.

1. Target URL: http://www.glidedsky.com/ (the first problem on this site).

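2. Implementation in Python with requests + lxml etree + XPath. (For cookie_str, use the cookies from your own logged-in session; for security reasons, the cookie_str below is deliberately invalid!)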

import requests

from lxml import etree

cookie_str="__gads=ID=22f6a2858602bd803-2259684f8fc40016:T=1604466297:RT=1604466297:S=ALNI_MYdpnvlmVSlQmK8R_5QLUQZrnNr6A; _ga=GA1.2.361744110.1604466299; footprints=eyJpdiI6IldHVmx6d1wvU2NYR0dJaFZOOHZQRW1RPT0iLCJ2YWx1ZSI6IkdrSmNXV2VlT2RERmZcL0FORXdVQlljc29taUxVMklvaGZ3cCtDM09QV1VHOEhjakRCNmhlNTg2ZWJBVk9pSjdOIiwibWFjIjoiZDhiYTgzMGZkODg5YjRkMmY4MzQ1MjZmMTUxOTU2YTY3YzAxYzk1OWY4NDkwNGExMjI2NDQ0YzY1NDkzMTg2ZCJ9; Hm_lvt_020fbaad6104bcddd1db12d6b78812f6=1604466299,1604466352,1604591546; _gid=GA1.2.414782633.1604591546; remember_web_59ba36addc2b2f9401580f014c7f58ea4e30989d=eyJpdiI6IllaSUdOTmxxWEJRSmJRXC9EbkhwKzRRPT0iLCJ2YWx1ZSI6IkRON1RlVTNJd3M5RTRRTVwvTTh3b0JBSW44RVVVeTlwaE5oQzR1Q1NjQlhseEVMbHRBa2owMlFTUzhyeXlFa1JGTHdKU1wvclVjS1Y3Slo5blptUW02ekhxSGxGTjhGK05hSzJPTjRKb0NROG56NkY5SUswOWFYSjhubklUemtaNmlqanp2bXAxRCt1K0o0ZGlaS0htYWlzbllsR1wvbGIrRURSeDhJV2QxNktYTT0iLCJtYWMiOiIyZDNjY2ZlY2MzM2YwZjc4MzVkNmQyMzQ4M2QwMDgxODkzNTE3YjFmZWFhMTk3MDkxNGJkNTI5Nzg3Njc2Mjc5In0%3D; _gat_gtag_UA_75859356_3=1; XSRF-TOKEN=eyJpdiI6InEyNkhoa1B1WHIrVkwyZzdrSTdlUXc9PSIsInZhbHVlIjoiUTM0VEpXc1IrMnlsWm9WOW9CQmpuVjNwUmhDY2JIZWE1WmZTWDNHXC9ucndDbUFsemNiU0ZiQ21qaWRGb2FaS00iLCJtYWMiOiIzZGQzMjEzNGM4ZDQyNjJlYjNkY2IxNWFmZGFiMTM1ZjdiZmQ0MmIxMDMyMDUwNWYzMWNmYWEzNTM2ZGY2ZWMwIn0%3D; glidedsky_session=eyJpdiI6IlJkQ0pvbTNFTHJNUFJvZkFZOWgrM0E9PSIsInZhbHVlIjoic25uKzJYV1hxcCtqcEViVjVRcnZzU045SVN5ek45MExlRm55YWxaT3M5aUZKaVBEV290M1F4VmFIVmM1UHcycyIsIm1hYyI6ImNlNmJjMWY2OTg3OWY1MTBjOTg0ZTRhZmEzZWMxOWVmODMxODk0ZTY0N2IwOTI2YjNiNmZjYmY3MmViZWUwMjEifQ%3D%3D; Hm_lpvt_020fbaad6104bcddd1db12d6b78812f6=1604591625"

# Split on the first "=" only (values such as __gads contain "=") and strip the space after each ";"
cookie_dict = dict(i.strip().split("=", 1) for i in cookie_str.split(";"))
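Cookie values can themselves contain `=` (the `__gads` value above does), and each pair after a `;` starts with a space, so the parsing step is worth isolating. The following is only a minimal sketch: the helper name `parse_cookie_header` and the sample string are hypothetical and are not part of the original script.

```python
def parse_cookie_header(cookie_str):
    """Turn a 'name=value; name2=value2' string into a dict."""
    cookies = {}
    for pair in cookie_str.split(";"):
        pair = pair.strip()          # drop the space that follows each ";"
        if not pair:
            continue                 # ignore empty pieces, e.g. a trailing ";"
        # partition splits on the FIRST "=" only, so "=" inside the value survives
        name, sep, value = pair.partition("=")
        if sep:
            cookies[name] = value
    return cookies


# Made-up sample string, only for illustration
sample = "__gads=ID=abc:T=1; _ga=GA1.2.3; token=xyz%3D"
parsed = parse_cookie_header(sample)
print(parsed)
```

The resulting dict can be passed to `requests.get(url, cookies=...)` in the same way as `cookie_dict`.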

url = "http://www.glidedsky.com/level/web/crawler-basic-1"

res = requests.get(url,cookies=cookie_dict)

data = etree.HTML(res.text)

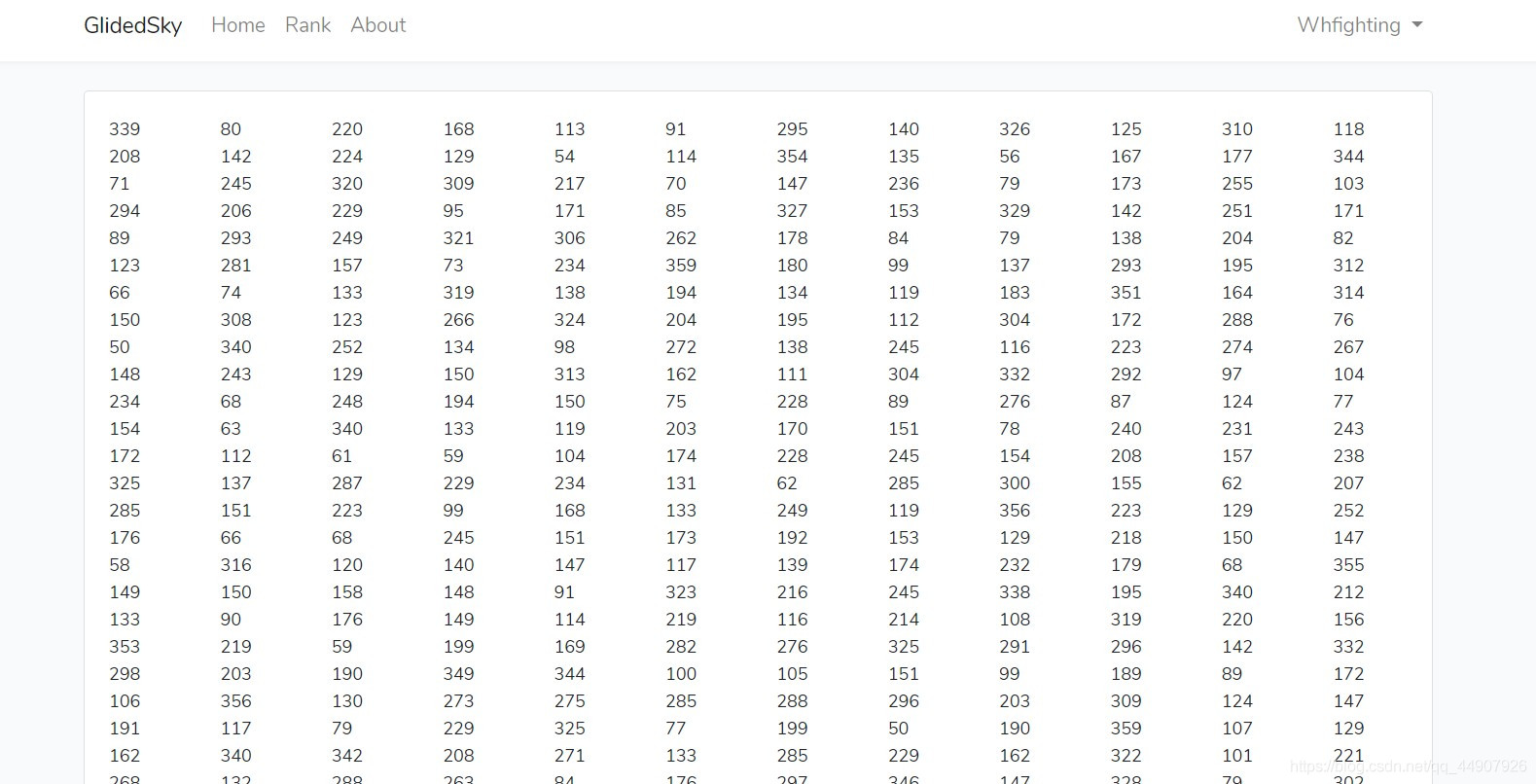

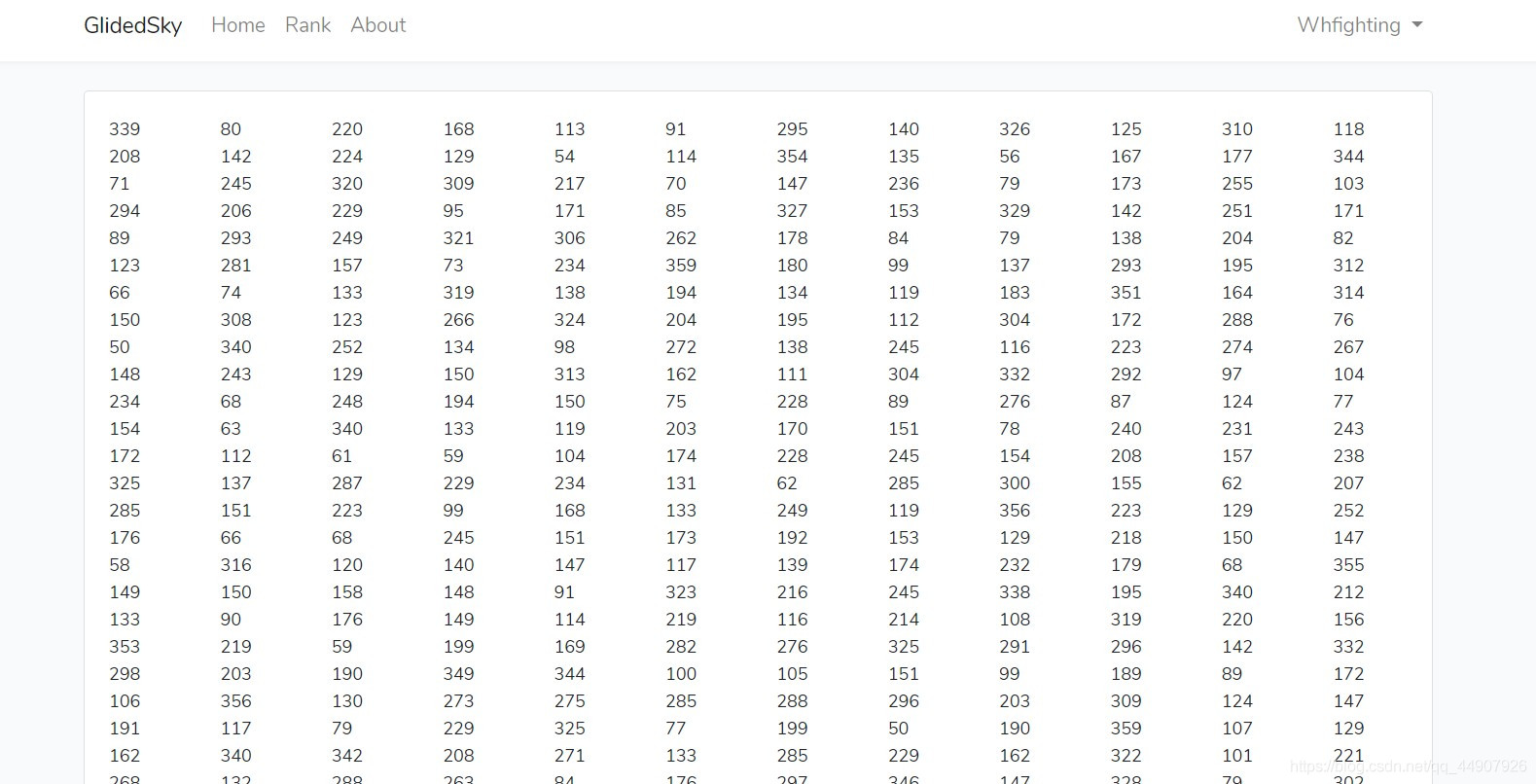

data_last = data.xpath("//div[@class='row']/div/text()")

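# Sum the text nodes directly (int() tolerates surrounding whitespace); skip whitespace-only nodes
data_new = sum(int(s) for s in data_last if s.strip())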

print("Sum:", data_new)

# Alternative: join all the text, split on whitespace, then add the numbers in a loop
num = "".join(data_last).split()

a = 0

for i in num:

    a += int(i)

print("Sum:", a)
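Text nodes returned by an XPath `text()` query often carry newlines and indentation, and some may be entirely blank. As a rough sketch of the summing step that runs without the network, the snippet below works on a made-up list standing in for `data_last`; the helper name `sum_text_nodes` and the sample values are assumptions, not data from the site.

```python
def sum_text_nodes(nodes):
    """Sum the integers found in a list of text nodes, ignoring whitespace."""
    # Joining with a space keeps adjacent numbers apart even if a node has
    # no padding; split() then drops blank nodes and surrounding whitespace.
    tokens = " ".join(nodes).split()
    return sum(int(tok) for tok in tokens)


# Made-up sample imitating padded text nodes, including a blank one
sample_nodes = ["\n    12\n  ", "\n  ", "\n    30\n  ", "\n    8\n  "]
total = sum_text_nodes(sample_nodes)
print("Sum:", total)
```

Joining with `" "` rather than `""` is a small defensive choice: with an empty separator, two unpadded nodes such as `"12"` and `"30"` would merge into `"1230"`.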
