Principle

https://en.wikipedia.org/wiki/Universal_approximation_theorem

https://www.youtube.com/watch?v=LQ33-GeD-4Y

https://arxiv.org/abs/1711.10561

https://arxiv.org/abs/physics/9705023

Problem:

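Universal approximation theorem: a shallow feedforward neural network with enough neurons in its hidden layer can approximate any continuous function on a compact domain to arbitrary accuracy.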
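The theorem can be illustrated numerically. The following is a minimal sketch, not a standard training procedure: it assumes tanh hidden units with randomly chosen centers and slopes, and fits only the output weights by least squares. The target function, the unit counts, and the helper names `hidden_features` and `fit_rms_error` are illustrative choices, not taken from the sources above.

```python
import numpy as np

def hidden_features(x, centers, slopes):
    """Hidden-layer activations tanh(slope * (x - center)) plus a constant column."""
    acts = np.tanh(slopes[None, :] * (x[:, None] - centers[None, :]))
    return np.hstack([acts, np.ones((x.size, 1))])

def fit_rms_error(x, y, centers, slopes):
    """Fit the output weights by least squares and return the RMS error of the fit."""
    features = hidden_features(x, centers, slopes)
    out_weights, *_ = np.linalg.lstsq(features, y, rcond=None)
    return float(np.sqrt(np.mean((features @ out_weights - y) ** 2)))

rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 200)
y = np.sin(2.0 * np.pi * x)                  # continuous target function

n_wide = 50
centers = rng.uniform(0.0, 1.0, n_wide)      # where each tanh unit switches
slopes = rng.uniform(2.0, 10.0, n_wide)      # how sharply it switches

# The narrow network reuses the first two units of the wide one.
rms_narrow = fit_rms_error(x, y, centers[:2], slopes[:2])
rms_wide = fit_rms_error(x, y, centers, slopes)
print(f"RMS error with 2 hidden neurons:  {rms_narrow:.2e}")
print(f"RMS error with 50 hidden neurons: {rms_wide:.2e}")
```

Because the narrow network's units are a subset of the wide network's, the wider fit cannot be worse; the error should shrink as hidden neurons are added.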

Constrained optimization problem: ![min[(\widehat{u}_{xx} + a\widehat{u}_x -b)^2+ (\widehat{u}(0) - u_0)^2 + (\widehat{u}(1) - u_1)^2]](http://style.iis7.com/uploads/2021/06/0807152750.gif)

Solve the problem with a fully connected neural network that has a single hidden layer. The network input is $x$ and the output is $\widehat{u}$.

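Hidden layer and output layer: $\widehat{u}(x) = w_2 f(w_1 x)$, where $f$ is the activation function.

Differentiate the output layer with respect to $x$ and substitute the result into the constrained optimization problem: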

![min[(w_2f''(w_1x) + aw_2f'(w_1x) -b)^2+ (w_2f(0) - u_0)^2 + (w_2f(1) - u_1)^2]](http://style.iis7.com/uploads/2021/06/0807212756.gif)

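Training set: points $x$ sampled from the domain $[0, 1]$. No precomputed solution values are needed, because the loss is built from the residual of the equation and the boundary conditions.

Train the neural network on the training set to find the weights, so that eventually ![[(w_2f''(w_1x) + aw_2f'(w_1x) -b)^2+ (w_2f(0) - u_0)^2 + (w_2f(1) - u_1)^2]\rightarrow 0](http://style.iis7.com/uploads/2021/06/0807222757.gif)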
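The loss can be evaluated directly once the derivatives of the network are available. The sketch below is one way to do it under stated assumptions: a tanh activation, several hidden neurons without bias, a residual averaged over sample points in $[0, 1]$, and arbitrary illustrative values for $a$, $b$, $u_0$ and $u_1$. None of these choices come from the original. The derivatives are written out analytically; note the factors of $w_1$ produced by the chain rule.

```python
import numpy as np

def network(x, w1, w2):
    """Single-hidden-layer network u(x) = sum_j w2_j * tanh(w1_j * x) and its x-derivatives."""
    h = np.tanh(np.outer(x, w1))       # hidden activations, shape (n_points, n_hidden)
    dh = 1.0 - h ** 2                  # tanh'(z)
    d2h = -2.0 * h * dh                # tanh''(z)
    u = h @ w2
    u_x = (dh * w1) @ w2               # chain rule: one factor of w1
    u_xx = (d2h * w1 ** 2) @ w2        # chain rule: two factors of w1
    return u, u_x, u_xx

def loss(w1, w2, x, a, b, u0, u1):
    """Mean squared ODE residual plus squared boundary mismatches at x = 0 and x = 1."""
    _, u_x, u_xx = network(x, w1, w2)
    residual = u_xx + a * u_x - b
    u_left = network(np.array([0.0]), w1, w2)[0][0]
    u_right = network(np.array([1.0]), w1, w2)[0][0]
    return float(np.mean(residual ** 2) + (u_left - u0) ** 2 + (u_right - u1) ** 2)

rng = np.random.default_rng(1)
w1 = rng.normal(size=8)                # untrained weights, for illustration only
w2 = rng.normal(size=8)
x = np.linspace(0.0, 1.0, 20)          # sample points in the domain

loss_value = loss(w1, w2, x, a=1.0, b=2.0, u0=0.0, u1=1.0)
print("loss for untrained weights:", loss_value)

# Check the analytic derivatives against central finite differences.
eps = 1e-5
_, u_x, u_xx = network(x, w1, w2)
fd_u_x = (network(x + eps, w1, w2)[0] - network(x - eps, w1, w2)[0]) / (2 * eps)
fd_u_xx = (network(x + eps, w1, w2)[1] - network(x - eps, w1, w2)[1]) / (2 * eps)
max_err_first = float(np.max(np.abs(fd_u_x - u_x)))
max_err_second = float(np.max(np.abs(fd_u_xx - u_xx)))
print("max derivative mismatch:", max_err_first, max_err_second)
```

Training then amounts to adjusting `w1` and `w2` so that `loss` decreases toward zero. In practice an automatic differentiation library supplies the derivatives instead of hand-written formulas.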

Note: a network used this way is a surrogate model.

neurodiffeq 0.3.5

https://en.wikipedia.org/wiki/Lotka–Volterra_equations

https://pypi.org/project/neurodiffeq/

https://neurodiffeq.readthedocs.io/en/latest/

Installation

Create a new environment in Anaconda, then install the packages:

```shell
pip install torch
pip install neurodiffeq
# pip install -U neurodiffeq   # upgrade to the latest version
```

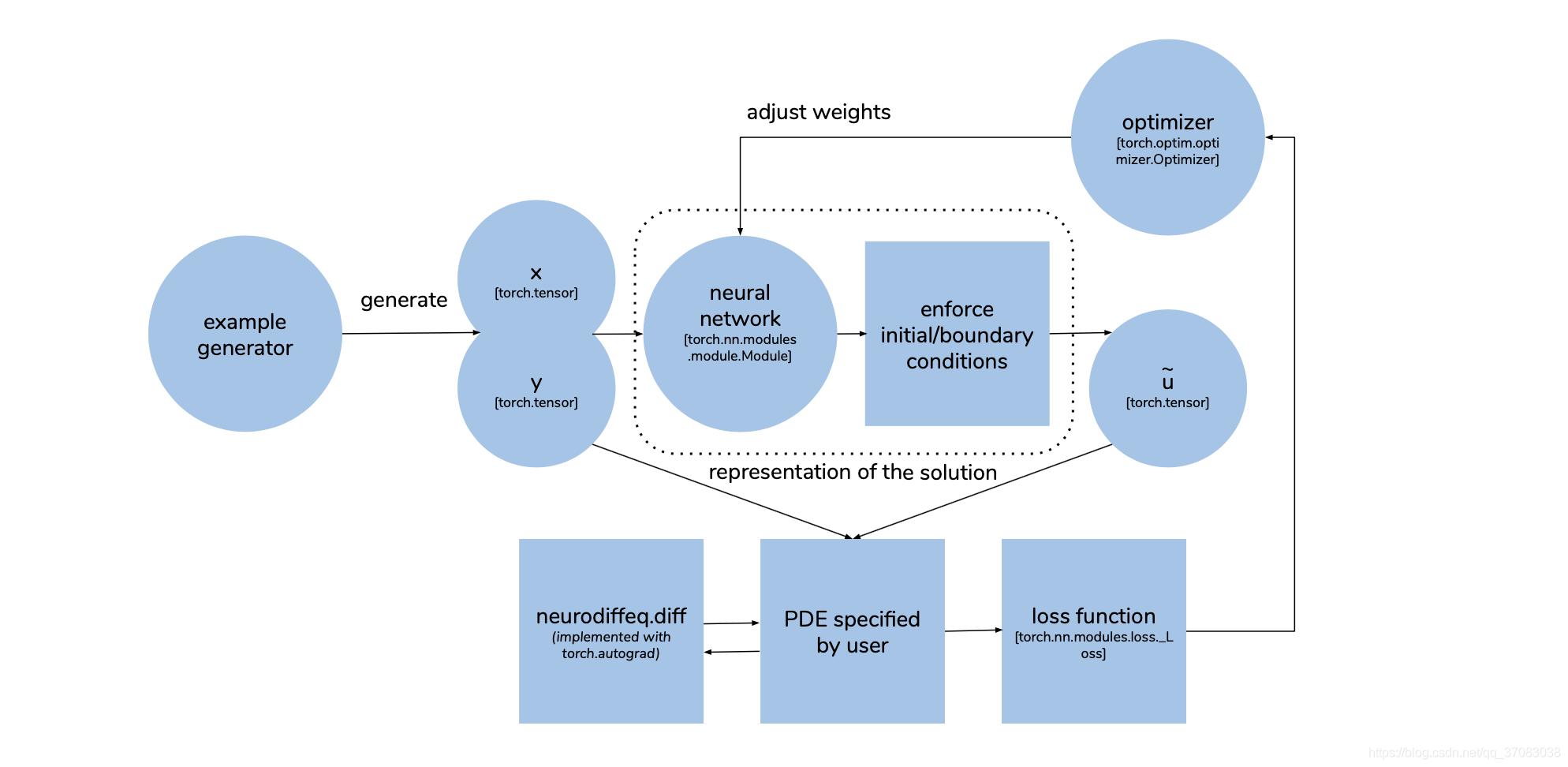

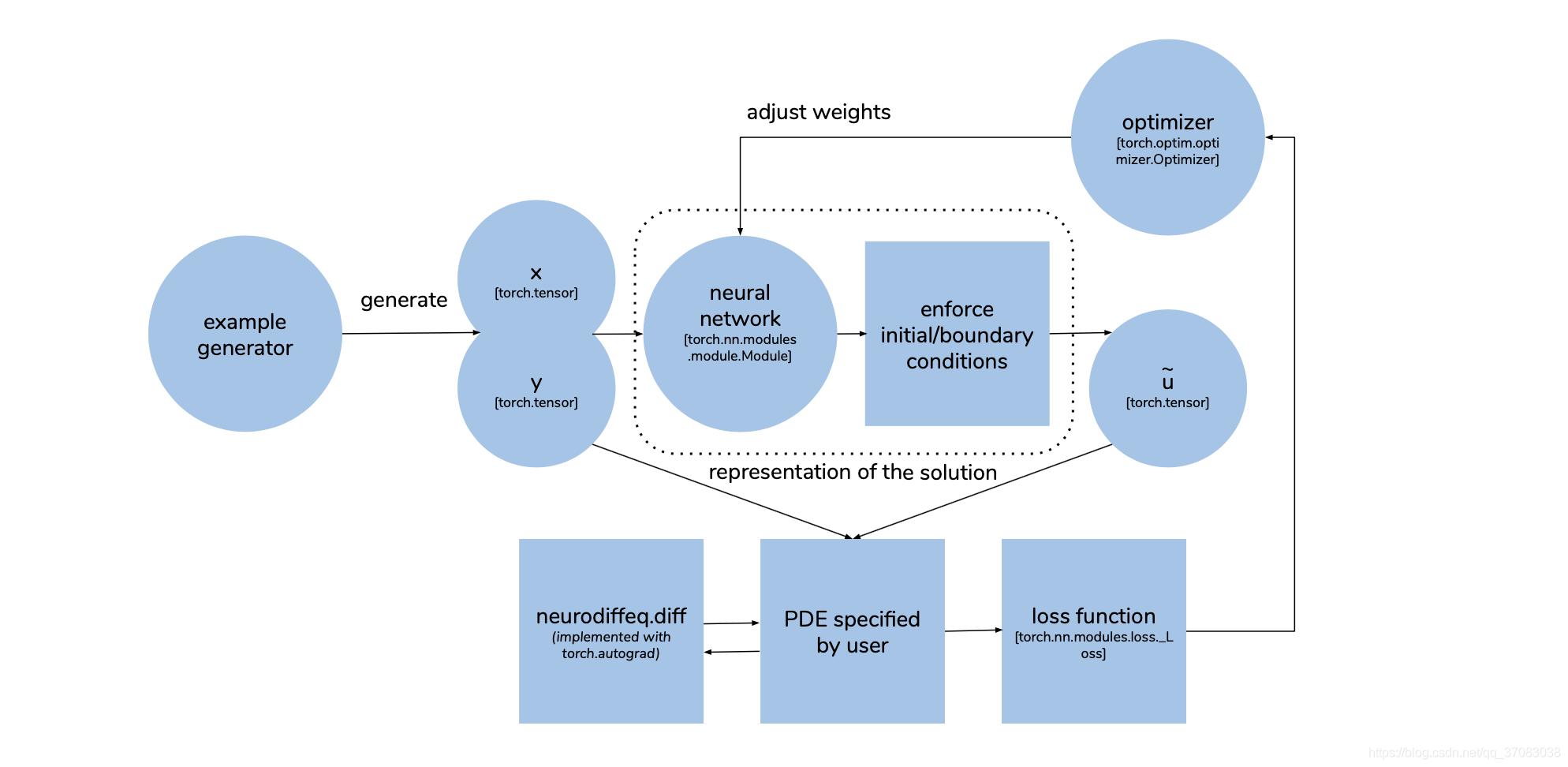

neurodiffeq solves differential equations with neural networks and is built on PyTorch.

Example

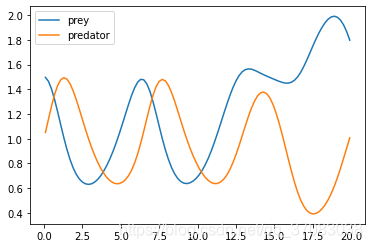

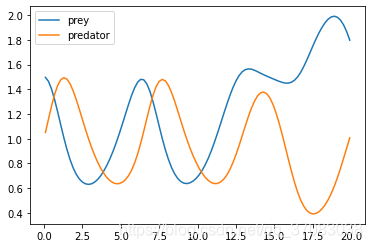

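The Lotka–Volterra equations, also known as the predator–prey equations.

All parameters are set to 1.

The initial value of the prey x is 1.5, and the initial value of the predator y is 1.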

```python
from neurodiffeq import diff
from neurodiffeq.solvers import Solver1D
from neurodiffeq.conditions import IVP
from neurodiffeq.networks import FCNN, SinActv
import numpy as np
import matplotlib.pyplot as plt

# Define the ODE system (each returned expression should equal zero)
def ode_system(u, v, t):
    return [diff(u, t) - (u - u * v), diff(v, t) - (u * v - v)]

# Define the initial conditions: u(0) = 1.5, v(0) = 1.0
conditions = [IVP(t_0=0.0, u_0=1.5), IVP(t_0=0.0, u_0=1.0)]

# Define the ANNs; FCNN is a fully connected ANN.
# Defaults: 1 input unit, 1 output unit, hidden layers (32, 32); the activation is set to sin here.
# Two networks are defined: one maps t to u, the other maps t to v.
nets = [FCNN(actv=SinActv), FCNN(actv=SinActv)]

# Define the solver; the training points t lie in [0.1, 12.0]
solver = Solver1D(ode_system, conditions, t_min=0.1, t_max=12.0, nets=nets)

# Train
solver.fit(max_epochs=3000)

# After training, evaluate the solution at arbitrary points:
# for t = np.arange(0.1, 20, 0.2), use the trained ANNs to compute u and v
solution = solver.get_solution()
test_t = np.arange(0.1, 20, 0.2).tolist()
value_at_points = solution(test_t)
test_u, test_v = value_at_points[0].tolist(), value_at_points[1].tolist()
# u = solution(x, y, to_numpy=True)

# Plot
plt.plot(test_t, test_u, label='prey')
plt.plot(test_t, test_v, label='predator')
plt.legend()
plt.show()
```

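No other method is used here for comparison, but the plot suggests that within the training range ![t \in [0.1,12]](http://style.iis7.com/uploads/2021/06/0807262761.gif) the computed values are reasonable, while beyond that range the error becomes large.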
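A conventional numerical integrator can supply the missing reference for comparison. The sketch below is an assumption-laden baseline, not part of neurodiffeq: a hand-written fixed-step fourth-order Runge–Kutta scheme with a step size of 0.01, applied to the same system and initial values as the example. The names `lotka_volterra` and `rk4` are illustrative.

```python
import numpy as np

def lotka_volterra(state):
    """Right-hand side of du/dt = u - u*v, dv/dt = u*v - v (all parameters equal to 1)."""
    u, v = state
    return np.array([u - u * v, u * v - v])

def rk4(f, state0, t_end, dt):
    """Integrate an autonomous system from t = 0 to t_end with fixed-step RK4."""
    n_steps = int(round(t_end / dt))
    states = np.empty((n_steps + 1, len(state0)))
    states[0] = state0
    for i in range(n_steps):
        s = states[i]
        k1 = f(s)
        k2 = f(s + 0.5 * dt * k1)
        k3 = f(s + 0.5 * dt * k2)
        k4 = f(s + dt * k3)
        states[i + 1] = s + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    times = np.linspace(0.0, n_steps * dt, n_steps + 1)
    return times, states

ref_t, ref_states = rk4(lotka_volterra, np.array([1.5, 1.0]), t_end=20.0, dt=0.01)
ref_u, ref_v = ref_states[:, 0], ref_states[:, 1]

# u - ln(u) + v - ln(v) is conserved by the exact solution,
# so its drift is a sanity check on the integrator itself.
invariant = ref_u - np.log(ref_u) + ref_v - np.log(ref_v)
max_drift = float(np.max(np.abs(invariant - invariant[0])))
print("max drift of the conserved quantity:", max_drift)
```

Plotting `ref_u` and `ref_v` against `ref_t` on the same axes as the network output would show where the two agree and where they separate beyond t = 12.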

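Principle: given the problem (the ODE and its initial conditions), training points t are sampled from the training interval, and the ANN is trained so that the residual of the ODE at those points goes to zero; no numerical solution is required as training data. The trained ANN is then evaluated at new values of the independent variable to obtain the solution there.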
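One way to handle the initial condition is to build it into the network output, so that it holds exactly regardless of the weights. The neurodiffeq documentation describes its `IVP` condition as a re-parameterization of this kind. The exact formula below, $u_0 + (1 - e^{-(t - t_0)}) N(t)$, is an assumption about that re-parameterization, and `enforce_ivp` is a hypothetical helper rather than a library function.

```python
import numpy as np

def enforce_ivp(t, raw_output, t_0, u_0):
    """Trial solution that equals u_0 at t = t_0 for any raw network output."""
    return u_0 + (1.0 - np.exp(-(t - t_0))) * raw_output

t = np.linspace(0.0, 12.0, 5)
raw = np.sin(3.0 * t) + 0.7      # stand-in for the output of an untrained network
u_trial = enforce_ivp(t, raw, t_0=0.0, u_0=1.5)
print("trial solution at t_0:", u_trial[0])
```

With this construction, training only has to reduce the ODE residual, since the initial condition cannot be violated.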

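Overview of the neurodiffeq modules

neurodiffeq.neurodiffeq: differentiation

neurodiffeq.networks: neural networks

neurodiffeq.conditions: initial conditions and boundary conditions

neurodiffeq.solvers: solvers

neurodiffeq.monitors: monitoring during training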

neurodiffeq.ode

neurodiffeq.pde

neurodiffeq.pde_spherical

neurodiffeq.temporal

neurodiffeq.function_basis

neurodiffeq.generators

neurodiffeq.operators

neurodiffeq.callbacks

neurodiffeq.utils
