#if !(PLATFORM_LUMIN && !UNITY_EDITOR)

using OpenCVForUnity.CoreModule;

using OpenCVForUnity.FaceModule;

using OpenCVForUnity.ImgprocModule;

using OpenCVForUnity.ObjdetectModule;

using OpenCVForUnity.UnityUtils;

using OpenCVForUnity.UnityUtils.Helper;

using System;

using System.Collections;

using System.Collections.Generic;

using UnityEngine;

using UnityEngine.SceneManagement;

using UnityEngine.UI;

using Rect = OpenCVForUnity.CoreModule.Rect;

namespace OpenCVForUnityExample

{

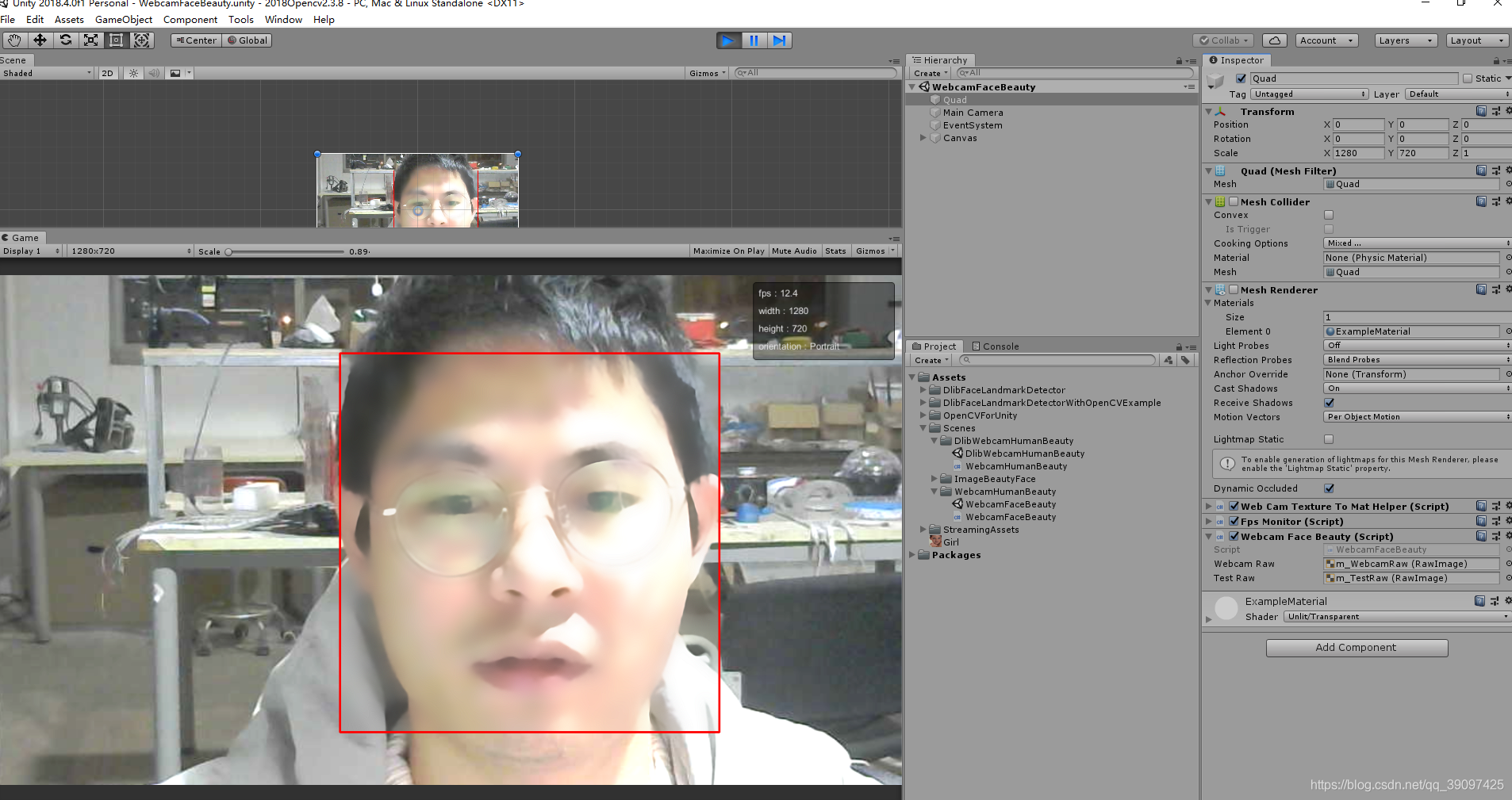

/// <summary>

/// FaceMark Example

/// An example of detecting facial landmarks in a WebCamTexture image using the face (FaceMark API) module.

/// The facemark model file can be downloaded here: https://github.com/spmallick/GSOC2017/blob/master/data/lbfmodel.yaml

/// Please copy it to the “Assets/StreamingAssets/facemark/” folder.

/// </summary>

[RequireComponent(typeof(WebCamTextureToMatHelper))]

public class WebcamFaceBeauty : MonoBehaviour

{

/// <summary>

/// The gray mat.

/// </summary>

private Mat grayMat;

/// <summary>

/// The texture.

/// </summary>

private Texture2D texture;

/// <summary>

/// The cascade.

/// </summary>

private CascadeClassifier cascade;

/// <summary>

/// The faces.

/// </summary>

private MatOfRect faces;

/// <summary>

/// The webcam texture to mat helper.

/// </summary>

private WebCamTextureToMatHelper webCamTextureToMatHelper;

/// <summary>

/// The facemark.

/// </summary>

private Facemark facemark;

/// <summary>

/// The FPS monitor.

/// </summary>

private FpsMonitor fpsMonitor;

/// <summary>

/// FACEMARK_CASCADE_FILENAME

/// </summary>

protected static readonly string FACEMARK_CASCADE_FILENAME = "lbpcascade_frontalface.xml";

/// <summary>

/// The facemark cascade filepath.

/// </summary>

private string facemark_cascade_filepath;

/// <summary>

/// FACEMARK_MODEL_FILENAME

/// </summary>

protected static readonly string FACEMARK_MODEL_FILENAME = "facemark/lbfmodel.yaml";

/// <summary>

/// The facemark model filepath.

/// </summary>

private string facemark_model_filepath;

#if UNITY_WEBGL && !UNITY_EDITOR

IEnumerator getFilePath_Coroutine;

#endif

// Use this for initialization

private void Start()

{

fpsMonitor = GetComponent<FpsMonitor>();

webCamTextureToMatHelper = gameObject.GetComponent<WebCamTextureToMatHelper>();

#if UNITY_WEBGL && !UNITY_EDITOR

getFilePath_Coroutine = GetFilePath();

StartCoroutine(getFilePath_Coroutine);

#else

facemark_cascade_filepath = Utils.getFilePath(FACEMARK_CASCADE_FILENAME);

facemark_model_filepath = Utils.getFilePath(FACEMARK_MODEL_FILENAME);

Run();

#endif

}

#if UNITY_WEBGL && !UNITY_EDITOR

private IEnumerator GetFilePath()

{

var getFilePathAsync_0_Coroutine = Utils.getFilePathAsync(FACEMARK_CASCADE_FILENAME, (result) =>

{

facemark_cascade_filepath = result;

});

yield return getFilePathAsync_0_Coroutine;

var getFilePathAsync_1_Coroutine = Utils.getFilePathAsync(FACEMARK_MODEL_FILENAME, (result) =>

{

facemark_model_filepath = result;

});

yield return getFilePathAsync_1_Coroutine;

getFilePath_Coroutine = null;

Run();

}

#endif

public RawImage m_WebcamRaw;

// Use this for initialization

private void Run()

{

if (string.IsNullOrEmpty(facemark_cascade_filepath) || string.IsNullOrEmpty(facemark_model_filepath))

{

Debug.LogError("model file is not loaded. The facemark model file can be downloaded here: https://github.com/spmallick/GSOC2017/blob/master/data/lbfmodel.yaml\n Please copy to “Assets/StreamingAssets/facemark/” folder. ");

}

// setup landmarks detector

facemark = Face.createFacemarkLBF();

facemark.loadModel(facemark_model_filepath);

// setup face detection

cascade = new CascadeClassifier(facemark_cascade_filepath);

if (cascade.empty())

{

Debug.LogError("cascade file is not loaded. Please copy from “OpenCVForUnity/StreamingAssets/” to “Assets/StreamingAssets/” folder. ");

}

#if UNITY_ANDROID && !UNITY_EDITOR

// Avoids the front camera low light issue that occurs in only some Android devices (e.g. Google Pixel, Pixel2).

webCamTextureToMatHelper.avoidAndroidFrontCameraLowLightIssue = true;

#endif

webCamTextureToMatHelper.Initialize();

}

/// <summary>

/// Raises the webcam texture to mat helper initialized event.

/// </summary>

public void OnWebCamTextureToMatHelperInitialized()

{

Debug.Log("OnWebCamTextureToMatHelperInitialized");

Mat webCamTextureMat = webCamTextureToMatHelper.GetMat();

texture = new Texture2D(webCamTextureMat.cols(), webCamTextureMat.rows(), TextureFormat.RGBA32, false);

Utils.fastMatToTexture2D(webCamTextureMat, texture);

//gameObject.GetComponent<Renderer>().material.mainTexture = texture;

m_WebcamRaw.texture = texture;

gameObject.transform.localScale = new Vector3(webCamTextureMat.cols(), webCamTextureMat.rows(), 1);

Debug.Log("Screen.width " + Screen.width + " Screen.height " + Screen.height + " Screen.orientation " + Screen.orientation);

if (fpsMonitor != null)

{

fpsMonitor.Add("width", webCamTextureMat.width().ToString());

fpsMonitor.Add("height", webCamTextureMat.height().ToString());

fpsMonitor.Add("orientation", Screen.orientation.ToString());

}

float width = webCamTextureMat.width();

float height = webCamTextureMat.height();

float widthScale = (float)Screen.width / width;

float heightScale = (float)Screen.height / height;

if (widthScale < heightScale)

{

Camera.main.orthographicSize = (width * (float)Screen.height / (float)Screen.width) / 2;

}

else

{

Camera.main.orthographicSize = height / 2;

}

grayMat = new Mat(webCamTextureMat.rows(), webCamTextureMat.cols(), CvType.CV_8UC1);

faceMat = new Mat(0, 0, CvType.CV_8UC4);

size = new Size(9, 9);

faces = new MatOfRect();

kernel = new Mat(3, 3, CvType.CV_32F, new Scalar(-1));

kernel.put(0, 0, 0, -1, 0, -1, 5, -1, 0, -1, 0);

}

/// <summary>

/// Raises the webcam texture to mat helper disposed event.

/// </summary>

public void OnWebCamTextureToMatHelperDisposed()

{

Debug.Log("OnWebCamTextureToMatHelperDisposed");

if (grayMat != null)

grayMat.Dispose();

if (texture != null)

{

Texture2D.Destroy(texture);

texture = null;

}

if (faces != null)

faces.Dispose();

if (kernel != null)

kernel.Dispose();

if (faceMat != null)

faceMat.Dispose();

if (bilateralFilterMat != null)

bilateralFilterMat.Dispose();

}

/// <summary>

/// Raises the webcam texture to mat helper error occurred event.

/// </summary>

/// <param name="errorCode">Error code.</param>

public void OnWebCamTextureToMatHelperErrorOccurred(WebCamTextureToMatHelper.ErrorCode errorCode)

{

Debug.Log("OnWebCamTextureToMatHelperErrorOccurred " + errorCode);

}

private Mat bilateralFilterMat;

private Mat kernel;

private Mat faceMat; // face region Mat

private Size size; // Gaussian blur kernel size

// Test (debug) parameters

public RawImage m_TestRaw;

private Texture2D _TestTexture;

// Update is called once per frame

private void Update()

{

if (webCamTextureToMatHelper.IsPlaying() && webCamTextureToMatHelper.DidUpdateThisFrame())

{

Mat rgbaMat = webCamTextureToMatHelper.GetMat();

rgbaMat.convertTo(rgbaMat, -1, 1.1f, 30);

Imgproc.cvtColor(rgbaMat, grayMat, Imgproc.COLOR_RGBA2GRAY);

// equalizeHist: histogram equalization, which normalizes image brightness and enhances contrast

Imgproc.equalizeHist(grayMat, grayMat);

// detect faces

cascade.detectMultiScale(grayMat, faces, 1.1, 2, 2, // TODO: objdetect.CV_HAAR_SCALE_IMAGE

new Size(grayMat.cols() * 0.2, grayMat.rows() * 0.2), new Size());

if (faces.total() > 0)

{

// fit landmarks for each found face

List<MatOfPoint2f> landmarks = new List<MatOfPoint2f>();

facemark.fit(grayMat, faces, landmarks);

Rect[] rects = faces.toArray();

if (rects.Length == 1)

{

// Slightly enlarge the face rectangle

//if (rects[0].x - 50 >= 0)

// rects[0].x -= 50;

//if (rects[0].y - 50 >= 0)

// rects[0].y -= 50;

//rects[0].width += 50;

//rects[0].height += 50;

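// Process the face region. A Mat constructed from a Mat and a Rect is a view (ROI) into rgbaMat, not a copy.

if (faceMat != null)

faceMat.Dispose();

faceMat = new Mat(rgbaMat, rects[0]);

// Gaussian blur (in place, so it writes directly into rgbaMat)

Imgproc.GaussianBlur(faceMat, faceMat, size, 0, 0);

// Bilateral filter: it accepts only 1- or 3-channel input, so drop the alpha channel first

Imgproc.cvtColor(faceMat, faceMat, Imgproc.COLOR_RGBA2BGR);

if (bilateralFilterMat != null)

bilateralFilterMat.Dispose();

bilateralFilterMat = new Mat(faceMat.rows(), faceMat.cols(), CvType.CV_8UC3);

Imgproc.bilateralFilter(faceMat, bilateralFilterMat, 30, 75, 75);

// Important: convert BGR back to RGBA before copying into rgbaMat

Imgproc.cvtColor(bilateralFilterMat, bilateralFilterMat, Imgproc.COLOR_BGR2RGBA);

// Sharpen: convolve the image with the custom kernel and write the result back in place

Imgproc.filter2D(bilateralFilterMat, bilateralFilterMat, bilateralFilterMat.depth(), kernel);

// Copy the processed face back into the frame through a second ROI view

Mat bgmat = new Mat(rgbaMat, rects[0]);

bilateralFilterMat.copyTo(bgmat);

bgmat.Dispose();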

//_TestTexture = new Texture2D(bilateralFilterMat.cols(), bilateralFilterMat.rows(), TextureFormat.BGRA32, false);

//Utils.matToTexture2D(bilateralFilterMat, _TestTexture);

//m_TestRaw.texture = _TestTexture;

//m_TestRaw.SetNativeSize();

}

for (int i = 0; i < rects.Length; i++)

{

//Debug.Log ("detect faces " + rects [i]);

Imgproc.rectangle(rgbaMat, new Point(rects[i].x, rects[i].y), new Point(rects[i].x + rects[i].width, rects[i].y + rects[i].height), new Scalar(255, 0, 0, 255), 2);

}

// draw them

//for (int i = 0; i < landmarks.Count; i++)

//{

// MatOfPoint2f lm = landmarks[i];

// float[] lm_float = new float[lm.total() * lm.channels()];

// MatUtils.copyFromMat<float>(lm, lm_float);

// DrawFaceLandmark(rgbaMat, ConvertArrayToPointList(lm_float), new Scalar(0, 255, 0, 255), 2);

// //for (int j = 0; j < lm_float.Length; j = j + 2)

// //{

// // Point p = new Point(lm_float[j], lm_float[j + 1]);

// // Imgproc.circle(rgbaMat, p, 2, new Scalar(255, 0, 0, 255), 1);

// //}

//}

}

Utils.fastMatToTexture2D(rgbaMat, texture);

}

}

/// <summary>

/// Blends the four-channel Mat scr onto the three-channel Mat dst, weighting by the alpha channel of scr and by scale.

/// Returns false only when scale is below 0.01; a channel-count mismatch returns true without modifying dst.

/// </summary>

private bool cvAdd4cMat_q(Mat dst, Mat scr, double scale)

{

if (dst.channels() != 3 || scr.channels() != 4)

{

return true;

}

if (scale < 0.01)

return false;

List<Mat> scr_channels = new List<Mat>();

List<Mat> dstt_channels = new List<Mat>();

Core.split(scr, scr_channels);

Core.split(dst, dstt_channels);

// CV_Assert(scr_channels.size() == 4 && dstt_channels.size() == 3);

if (scale < 1)

{

scr_channels[3] *= scale;

scale = 1;

}

for (int i = 0; i < 3; i++)

{

dstt_channels[i] = dstt_channels[i].mul(new Mat(scr_channels[3].size(), CvType.CV_8UC1, new Scalar(255.0 / scale)) - scr_channels[3], scale / 255.0);

dstt_channels[i] += scr_channels[i].mul(scr_channels[3], scale / 255.0);

}

Core.merge(dstt_channels, dst);

return true;

}

private void DrawFaceLandmark(Mat imgMat, List<Point> points, Scalar color, int thickness, bool drawIndexNumbers = false)

{

if (points.Count == 5)

{

Imgproc.line(imgMat, points[0], points[1], color, thickness);

Imgproc.line(imgMat, points[1], points[4], color, thickness);

Imgproc.line(imgMat, points[4], points[3], color, thickness);

Imgproc.line(imgMat, points[3], points[2], color, thickness);

}

else if (points.Count == 68)

{

for (int i = 1; i <= 16; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

for (int i = 28; i <= 30; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

for (int i = 18; i <= 21; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

for (int i = 23; i <= 26; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

for (int i = 31; i <= 35; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

Imgproc.line(imgMat, points[30], points[35], color, thickness);

for (int i = 37; i <= 41; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

Imgproc.line(imgMat, points[36], points[41], color, thickness);

for (int i = 43; i <= 47; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

Imgproc.line(imgMat, points[42], points[47], color, thickness);

for (int i = 49; i <= 59; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

Imgproc.line(imgMat, points[48], points[59], color, thickness);

for (int i = 61; i <= 67; ++i)

Imgproc.line(imgMat, points[i], points[i - 1], color, thickness);

Imgproc.line(imgMat, points[60], points[67], color, thickness);

}

else

{

for (int i = 0; i < points.Count; i++)

{

Imgproc.circle(imgMat, points[i], 2, color, -1);

}

}

// Draw the index number of facelandmark points.

if (drawIndexNumbers)

{

for (int i = 0; i < points.Count; ++i)

Imgproc.putText(imgMat, i.ToString(), points[i], Imgproc.FONT_HERSHEY_SIMPLEX, 0.5, new Scalar(255, 255, 255, 255), 1, Imgproc.LINE_AA, false);

}

}

private List<Point> ConvertArrayToPointList(float[] arr, List<Point> pts = null)

{

if (pts == null)

{

pts = new List<Point>();

}

if (pts.Count != arr.Length / 2)

{

pts.Clear();

for (int i = 0; i < arr.Length / 2; i++)

{

pts.Add(new Point());

}

}

for (int i = 0; i < pts.Count; ++i)

{

pts[i].x = arr[i * 2];

pts[i].y = arr[i * 2 + 1];

}

return pts;

}

/// <summary>

/// Raises the destroy event.

/// </summary>

private void OnDestroy()

{

webCamTextureToMatHelper.Dispose();

if (cascade != null)

cascade.Dispose();

if (facemark != null)

facemark.Dispose();

#if UNITY_WEBGL && !UNITY_EDITOR

if (getFilePath_Coroutine != null)

{

StopCoroutine(getFilePath_Coroutine);

((IDisposable)getFilePath_Coroutine).Dispose();

}

#endif

}

/// <summary>

/// Raises the back button click event.

/// </summary>

public void OnBackButtonClick()

{

SceneManager.LoadScene("OpenCVForUnityExample");

}

/// <summary>

/// Raises the play button click event.

/// </summary>

public void OnPlayButtonClick()

{

webCamTextureToMatHelper.Play();

}

/// <summary>

/// Raises the pause button click event.

/// </summary>

public void OnPauseButtonClick()

{

webCamTextureToMatHelper.Pause();

}

/// <summary>

/// Raises the stop button click event.

/// </summary>

public void OnStopButtonClick()

{

webCamTextureToMatHelper.Stop();

}

/// <summary>

/// Raises the change camera button click event.

/// </summary>

public void OnChangeCameraButtonClick()

{

webCamTextureToMatHelper.requestedIsFrontFacing = !webCamTextureToMatHelper.IsFrontFacing();

}

}

}

#endif
